I’m a long-time fan of visual management. Visualizing work helps to gather low-hanging fruits: it makes the biggest obstacles instantly visible and thus helps to facilitate the improvements. By the way, these early improvements are typically fairly easy to apply and have big impact. That’s why we almost universally propose visualization as a practice to start with.

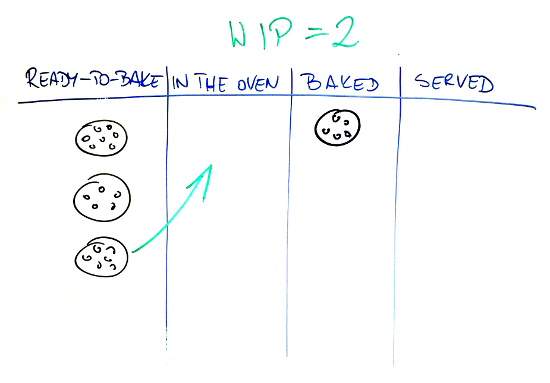

At the same time a real game-changer in the long run is when we start limiting work in progress (WIP). That’s where we influence the change of behaviors. That’s where we introduce slack time. That’s where we see emergent behaviors. That’s where we enable continuous improvements.

What’s frequently reported though, is that introducing WIP limits is hard for many teams. There’s resistance against the mechanism. It is perceived as a coercive practice by some. Many managers find it really hard to go beyond the paradigm of optimizing utilization. People naturally tend to do what they’ve always been doing: just pull more work.

How do we address that challenge? For quite some time the best idea I’ve got was to try it as an experiment with a team. Ultimately there needs to be team buy-in to make WIP limits work. If there is resistance against a specific practice, e.g. WIP limits, there’s not much point in enforcing it. It won’t work. However, why not give it a try for some time? There doesn’t have to be any commitment to go on after the trial.

The thing is that it usually feels better to have less work in progress. There’s not that much of multitasking and context switching. Cycle times go down so there’s more of sense of accomplishment. The pressure on the team often goes down as well.

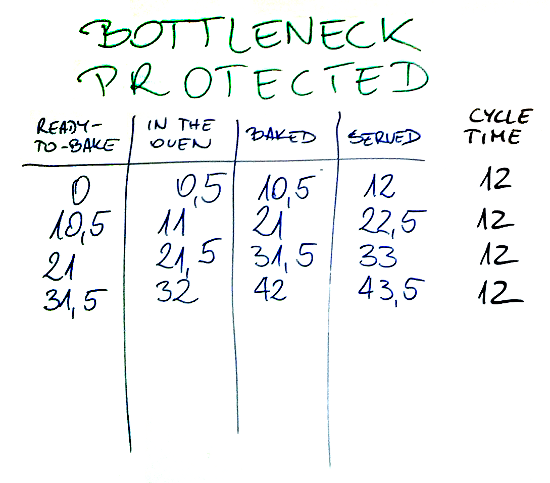

There’s also one thing that can show that the team is actually doing much better. It’s enough to measure start and end dates for work items. It will allow to figure out both cycle times (how much time it takes to finish a work item) and throughput (how many work items are finished in a given time window).

If we look at Little’s Law, or rather its adoption in Kanban context, we’ll see that:

It means that if we want to get shorter cycle time, a.k.a. quicker delivery and shorter feedback loops, we need either to improve throughput (which is often difficult) or cut work in progress (which is much easier).

We are then in a situation where we understand that limiting WIP is a good idea and yet realize that introducing such a practice is not easy. Is there another way?

One strategy that worked for me very well over years was to change the discussion around WIP limits altogether. What do we expect when WIP limits are in place? Well, we want people to pull less work and instead to focus on finishing items rather than starting them.

So how about focusing on these outcomes? It’s fairly simple. It’s enough to write a simple guidance. Whenever you finished work on an item first check whether there are any blockers. If there are attempt to solve them. If there aren’t any look at any unassigned items starting from the part of the board that’s closest to the done column (typically the rightmost part of the board). Then gradually go toward the beginning of value stream. Start a new item only when there’s literally nothing you can do with ongoing items.

If we took a developer as an example the process might look like this. Once they finish coding a new work item they look at the board. There is one blocked item. It just so happens that the blocker is waiting for a response from a client. A brief chat in the team may reveal that there’s no point in pestering the client for feedback on the ticket for now. At the same time it may be a good time to remind the client about the blocker.

Then the developer would go through the board starting at the point closest to the doneness. If the process in place was something like: development, code review, testing and acceptance by the client, the first place would be acceptance. Are there any work items where the client shared feedback, i.e. we need to implement some changes? If so that’s the next task to focus on. In fact, it doesn’t matter whether the developer was the author of the ticket but if there are any tickets that used to be his it may be input for prioritizing.

If there’s nothing to act upon in acceptance, then we have testing. Are there any bugs from internal testing than need to be fixed? Are there any tickets that wait for testing that the developer can take care of? Of course we have a century-old discussion about developers not willing to do the actual testing. I would however point that fixing bugs for the fellow developers is equally valuable activity.

If there’s nothing in testing that can be taken care of then we move to code review and take care of anything that’s waiting for code review or implement feedback from code review that’s been done already.

Then we move to development and try to figure out whether the developer can help with any ongoing work item. Can we pair with other team members? Or maybe there are obstacles where another pair of eyes may be useful?

Only after going through all the steps the developer moves to the backlog and pulls a new ticket.

The interesting observation is that in vast majority of cases there will be something to take care of. Just try to imagine a situation where there’s literally nothing that’s blocked, requires fixing or improvements and nothing that’s in waiting queue. If we face such a situation we likely don’t need to limit work in progress any further.

And that’s the whole trick. Instead of introducing an artificial mechanism that yields specific outcomes we can focus on these outcomes. If we can guide the team to adopt simple guidance for choosing tasks we effectively made them limit work in progress and likely with much less resistance.

Now does it matter that we don’t have explicit WIP limits? No, not really. Does it matter that the actual limits may fluctuate a bit more than in a case when the process has hard limits? Not much. Do we see actual improvements? Huge.

So here’s an idea. Don’t focus on practices. Focus on understanding and outcomes. It may yield similarly good or better results and with a fraction of resistance.