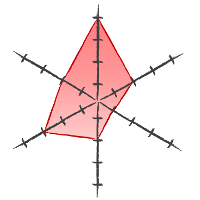

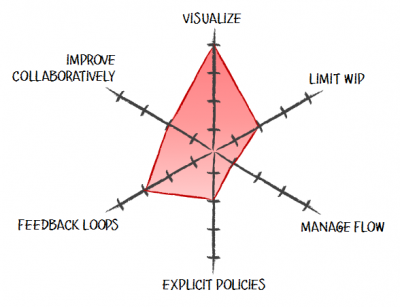

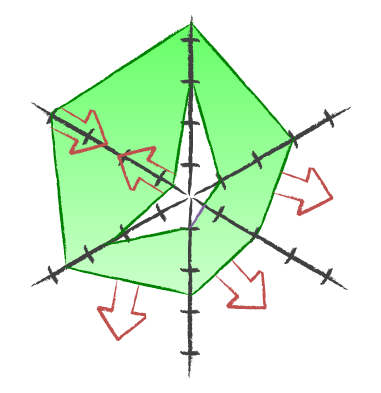

One of the outcomes of Hakan Forss’ session on the depth of Kanban practices at the Kanban Leadership Retreat was the idea of using radar charts to show the maturity of a Kanban implementation. The whole discussion started with the realization that different teams adopt Kanban practices in different orders, so we need some tool to assess them.

Radar charts, or spider charts, seem to be good tools for visualizing how well a team is doing. However, when you start using them, interesting things pop up.
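To make the picture concrete, here is a minimal sketch of how a radar chart places a team's scores: each practice gets its own axis, the axes are spaced evenly around a circle, and a score is drawn as a distance from the center. The practice names, the 0-5 scale and the `radar_vertices` helper are illustrative assumptions, not part of any particular tool; the resulting points could be handed to any plotting library to draw the polygon.

```python
import math


def radar_vertices(scores, max_score=5.0, radius=100.0):
    """Return (practice, x, y) for each axis of a radar chart.

    Axes are spaced evenly around the circle, starting at 12 o'clock and
    going clockwise; a score equal to max_score lands on the outer ring.
    """
    n = len(scores)
    vertices = []
    for i, (practice, score) in enumerate(scores.items()):
        angle = math.pi / 2 - 2 * math.pi * i / n  # clockwise from the top
        r = radius * score / max_score             # distance from the center
        # Adding 0.0 turns a rounded -0.0 into a plain 0.0 for nicer output.
        x = round(r * math.cos(angle), 2) + 0.0
        y = round(r * math.sin(angle), 2) + 0.0
        vertices.append((practice, x, y))
    return vertices


# Hypothetical self-assessment of one team on a 0-5 scale.
team = {"visualize": 5, "limit WIP": 2, "manage flow": 3, "explicit policies": 1}
for practice, x, y in radar_vertices(team):
    print(f"{practice:>17}: ({x}, {y})")
```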

Coming Up with Results

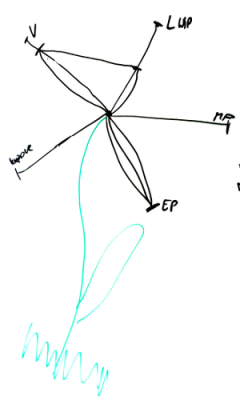

First, how exactly do you tell how mature the adoption of a specific practice is? How far are we with visualization on a scale from 0 to 5? Why? What about limiting work in progress? And so on.

One of my teams decided to define 0 as “doing nothing” and the maximum as “where we think we would like to be.” With such an approach, a radar chart can be treated as a motivational poster: it shows exactly how much we still have to do with our Kanban implementation. It also means that the team aims at a moving target: as time passes, they will likely improve and thus set more ambitious goals.
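Under this convention, the distance between a score and the outer ring is simply the work still ahead. A minimal sketch, assuming a 0-5 scale where 5 means “where we would like to be”; the scores and the `remaining_gaps` helper are hypothetical:

```python
MAX_SCORE = 5  # assumption: 0 = "doing nothing", 5 = "where we would like to be"


def remaining_gaps(scores, max_score=MAX_SCORE):
    """Return (practice, gap) pairs, biggest gap to the team's own target first."""
    for practice, score in scores.items():
        if not 0 <= score <= max_score:
            raise ValueError(f"{practice}: score {score} is outside 0..{max_score}")
    gaps = {practice: max_score - score for practice, score in scores.items()}
    return sorted(gaps.items(), key=lambda item: item[1], reverse=True)


# Hypothetical scores; the target (5) is whatever the team currently aims for.
print(remaining_gaps({"visualize": 4, "limit WIP": 1, "manage flow": 2}))
# [('limit WIP', 4), ('manage flow', 3), ('visualize', 1)]
```

Because the target moves as the team raises its ambitions, gaps computed months apart are not directly comparable; each result is best read as a snapshot against the goals of that moment.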

There is also a drawback to this approach. Such an assessment is very subjective and very prone to gaps in knowledge. If I think that all there is to WIP limits is setting a number in each column on the board and not violating it, I will easily hit the max on the “limiting WIP” axis. Then, of course, I’ll award myself the Optimist of the Week and Ignorant of the Month prizes, but that’s another story.

On a side note: I pretty much expect that someone is going to come up with some kind of a poll with a bunch of questions that do the job for you and tell you how far you are with each practice. And, similarly to the Nokia Test, I think it will be a very mixed blessing with negatives outweighing positives.

Finding Common Results

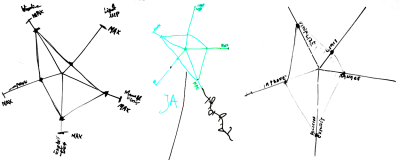

The second issue is about gathering collective knowledge from a team. People will likely differ in their judgment: one will say that visualization is really mature, while another will state that there’s a lot more to be done there.

The obvious strategy is to discuss the areas where the differences are the biggest. However, this is not a fancy flavor of planning poker, so, for heaven’s sake, don’t try to make everyone agree on the same number. It is subjective, after all.
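One way to pick those areas without forcing a consensus is to rank practices by the spread of individual answers and leave the numbers themselves alone. The sketch below assumes each person scores each practice on the same 0-5 scale; the names, scores, the `discussion_agenda` helper and the `min_spread` threshold are all hypothetical.

```python
def discussion_agenda(assessments, min_spread=2):
    """Order practices by how much team members disagree about them.

    assessments maps each person to their {practice: score} judgment.
    Only practices whose spread (max - min) reaches min_spread are listed;
    the scores are deliberately NOT averaged into one "agreed" number.
    """
    practices = next(iter(assessments.values())).keys()
    spreads = {}
    for practice in practices:
        values = [scores[practice] for scores in assessments.values()]
        spreads[practice] = max(values) - min(values)
    agenda = [(p, s) for p, s in spreads.items() if s >= min_spread]
    return sorted(agenda, key=lambda item: item[1], reverse=True)


team = {
    "Ann": {"visualize": 4, "limit WIP": 1, "manage flow": 3},
    "Bob": {"visualize": 2, "limit WIP": 1, "manage flow": 2},
    "Eve": {"visualize": 5, "limit WIP": 2, "manage flow": 2},
}
print(discussion_agenda(team))  # [('visualize', 3)]
```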

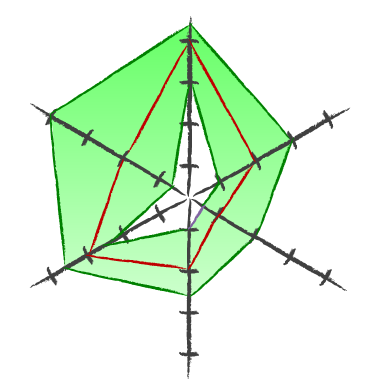

Another interesting trick is to put all the results on a single radar chart, with the minimum and maximum values forming the borders of an area. This area shows you how your Kanban implementation is perceived.

With such a graph, you not only want this bagel to spread as far out as possible, but also to be as thin as possible. The latter may be an even more important goal in the short term, as a wide spread of results means that team members understand the tool they use very differently.
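The band is just the per-practice minimum and maximum across team members. The sketch below computes it and adds two rough numbers for the bagel: the mean of the inner border (how far out it sits) and the mean width (how thick it is). These two summaries are an illustrative assumption, not something the charts themselves prescribe, and the data is made up.

```python
def envelope(assessments):
    """Per-practice (min, max) across all team members: the inner and outer
    borders of the band drawn on a single radar chart."""
    practices = next(iter(assessments.values())).keys()
    band = {}
    for practice in practices:
        values = [scores[practice] for scores in assessments.values()]
        band[practice] = (min(values), max(values))
    return band


def band_shape(band):
    """Mean of the inner border (higher = further out) and mean band width
    (lower = thinner, i.e. a more shared understanding)."""
    inner = [low for low, _ in band.values()]
    widths = [high - low for low, high in band.values()]
    return {"mean_inner": sum(inner) / len(inner), "mean_width": sum(widths) / len(widths)}


team = {
    "Ann": {"visualize": 4, "limit WIP": 1, "manage flow": 4},
    "Bob": {"visualize": 5, "limit WIP": 3, "manage flow": 4},
}
band = envelope(team)
print(band)              # {'visualize': (4, 5), 'limit WIP': (1, 3), 'manage flow': (4, 4)}
print(band_shape(band))  # {'mean_inner': 3.0, 'mean_width': 1.0}
```

In these terms, a maturing adoption would push `mean_inner` up while pulling `mean_width` down.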

Comparing Results between Teams

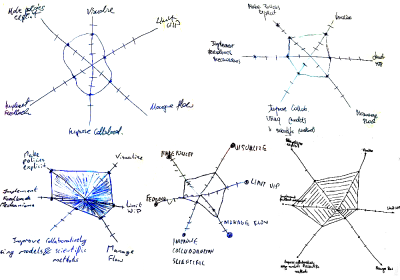

The third issue pops up when you compare graphs created by different teams. Let’s assume you have already solved both issues above and you have some consistent way of judging the maturity of Kanban practices. It is still very likely that different teams will follow different paths to Kanban adoption, so their charts will differ. After all, this is what launched the whole discussion in the first place.

It means, however, that you can draw very interesting conclusions from comparing the results of different teams. The point is not to say which team is better and which needs more work. Instead, you launch discussions on how people are doing things and why they think they are good (or bad) at them. You enable collaborative learning.

As a bonus, you can see patterns at a higher level. For example, you may find that people across the organization are doing pretty well with visualization, have very mixed outcomes in managing flow and are not that good at limiting WIP. This can help you focus your coaching and training effort on specific areas.
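Spotting such patterns can be as simple as looking at the average and the spread of each practice across teams. A sketch with hypothetical teams and thresholds (`good`, `weak` and `mixed_range` are arbitrary choices for illustration, not recommended values):

```python
def org_patterns(team_scores, good=3.5, weak=2.0, mixed_range=2):
    """Label each practice across teams.

    team_scores maps team name -> {practice: score}. A practice is "mixed"
    when teams disagree widely (max - min >= mixed_range); otherwise it is
    labelled by the average score. All thresholds are illustrative.
    """
    practices = next(iter(team_scores.values())).keys()
    labels = {}
    for practice in practices:
        values = [scores[practice] for scores in team_scores.values()]
        average = sum(values) / len(values)
        if max(values) - min(values) >= mixed_range:
            labels[practice] = "mixed"
        elif average >= good:
            labels[practice] = "doing well"
        elif average <= weak:
            labels[practice] = "needs focus"
        else:
            labels[practice] = "in between"
    return labels


teams = {
    "Team A": {"visualize": 4, "manage flow": 1, "limit WIP": 1},
    "Team B": {"visualize": 4, "manage flow": 4, "limit WIP": 2},
    "Team C": {"visualize": 5, "manage flow": 2, "limit WIP": 1},
}
print(org_patterns(teams))
# {'visualize': 'doing well', 'manage flow': 'mixed', 'limit WIP': 'needs focus'}
```

As with the charts themselves, these labels are conversation starters for coaching, not a ranking of teams.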

Besides, it is fun to see what a personal kanban maturity radar chart can look like.

To summarize, radar charts are nice visuals that show you where you are with your Kanban adoption, but they can, and should, also be used as a communication enabler and a learning catalyst.


10 comments

Hi

I like the approach very much and will give it a try.

But one question regarding your statement:

“On a side note: I pretty much expect that someone is going to come up with some kind of a poll with a bunch of questions that do the job for you and tell you how far you are with each practice. And, similarly to the Nokia Test, I think it will be a very mixed blessing with negatives outweighing positives.”

Could you explain further why such a poll would have “negatives outweighing positives”?

@Alexander – The problem with tools such as the Nokia Test is that people just count the practices they do and don’t pay attention to how they do them. One of the canonical examples is unit testing. You can easily achieve 100% coverage; you can even write tests first and still have a very immature approach to unit testing.

At the same time, following the spike-and-stabilize approach, you can decide not to write unit tests in a specific situation, which is a sign of high maturity.

How can a poll address that?

Then, you get what you measure. If you measure the quantity of practices and not their quality, well, this is exactly what you get. The sad thing is that such organizations will brag about how awesome they are, while the truth is totally different.

That’s why I don’t like the Nokia Test. Common sense beats it 10 times out of 10.

Hi Pawel. Very good thoughts.

I have three questions:

1. Have you included measurements in “manage flow”? I think they are an important dimension of this model.

2. How can you measure things like Limited WiP on a 5-level scale? I can see only 3: no limits, limits that are disobeyed, and limits that are obeyed.

2.1. Is this a fixed scale?

Thank you.

Best Regards!

@Celso – 1. Actually, measures, and how teams use them, are something that usually distinguishes more mature teams from less advanced ones. So yes, this is important to me and also something I preach when working with different teams. I would, however, be far from enforcing a single interpretation of it. As David Anderson points out, the maturity of Kanban is a matter of intent (and of the moment as well), so the scale will likely evolve as a team gains more experience.

2. What if WIP limits are too loose (or too tight, for that matter)? Or left untouched for a long, long time? What if WIP limits are violated, but only occasionally? What if some WIP limits work well, but others don’t? What if there are (too many) buffers? What if blocked work isn’t included in WIP limits? What if the expedite lane is abused? What if not all work goes through the board? What if items on the board aren’t updated, so limiting WIP is sometimes disconnected from reality? There are many dimensions to take into consideration when thinking about limiting WIP. I believe this is the part of Kanban that usually needs the most fine-tuning and most often pushes people out of their comfort zones, so this work is often deferred.

2.1. The scale can be whatever you want. It can be 0-100%, or it can have a few distinct stages. Use whatever works for you and your team. Note, however, that it is really, really hard to distinguish 37% from 39%. It will be much easier to decide with a rather short scale.
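A hypothetical helper illustrating the point: when a fine-grained percentage is collapsed onto a short 0-5 scale, judgments like 37% and 39%, which are hard to tell apart anyway, land on the same level. The `to_short_scale` name and the round-half-up rule are assumptions for illustration.

```python
def to_short_scale(percent, steps=5):
    """Map a 0-100% judgment onto a short 0..steps scale, rounding half up."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    # int(x + 0.5) rounds half up for non-negative x, unlike round(),
    # which rounds halves to the nearest even number.
    return int(percent * steps / 100 + 0.5)


print(to_short_scale(37), to_short_scale(39))  # 2 2
```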

Thanks for explaining your statement!

Good point, Pawel. So how can we measure how deep we are to begin with? Is there any standard set of questions? If I ask the team a general question on a scale of 1-5, it’s not going to be accurate. Could you please advise? Thanks, Barry

I agree that polling questions don’t produce great results. Rally has had a version of this for Scrum for a long time, with mixed results. Nokia’s has only a few questions, so people interpret them badly. Rally’s has a lot of questions, so people miss nuances, or just get bored answering.

I wonder if experienced Kanbaners could come up with questions that would help us assess teams more consistently. The kind of elaboration you did around WIP limits for Alexander is the direction I mean. I wouldn’t intend this to be a self-assessment tool, which, as you say, suffers from subjectivity and lack of experience.

@Rick – First of all, I really missed you at KLRAT, even more so since we had quite a hot discussion around the subject.

One thing that we already have is the lists David shared in his presentation on depth of Kanban at LLKD13 (second part of the session). Another example is from Christophe Achouiantz. However, I personally like neither of these (as explained in a comment under Christophe’s post). For me, it is too much of a “one size fits all” sort of approach and brings the Nokia Test to mind far too vividly.

I prefer to work on teams’ understanding of what Kanban is and then let them self-evaluate, instead of focusing on a pseudo-objective way of evaluating the depth of a Kanban implementation. After all, depth of Kanban implementation is never a goal per se for me. It is always about helping a team get better, which doesn’t necessarily mean that the team has to go as deep as possible.

Hi Pawel, I’m writing a proposal on the use of radar charts as a mechanism for producing meaningful project status reports. I found your article while doing a literature review on this topic. Good article, mate.

Cheers, Shim.

@Shim – Thanks. There’s one more post on the tool going out (hopefully) soon. The problem is it needs to be written first :)