There is one thing we take almost for granted whenever analyzing how the work is done. It is Little’s Law. It says that:

Average Cycle Time = Work in Progress / Throughput

This simple formula tells us a lot about ways of optimizing work. And yes, there are a few approaches to achieve this. Obviously, there is more than the standard way, used so commonly, which is attacking throughput.
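To make the levers concrete, here is a minimal Python sketch of the formula. The function name and the team numbers are made up for illustration; only the relationship itself comes from Little’s Law.

```python
def average_cycle_time(work_in_progress: float, throughput: float) -> float:
    """Little's Law: average cycle time = work in progress / throughput."""
    if throughput <= 0:
        raise ValueError("throughput must be positive")
    return work_in_progress / throughput


# Hypothetical team: 12 items in progress, 3 items finished per day.
print(average_cycle_time(12, 3))  # 4.0 (days)

# Two ways to halve the average cycle time:
print(average_cycle_time(6, 3))   # 2.0, by halving WIP
print(average_cycle_time(12, 6))  # 2.0, by doubling throughput
```

Both changes give the same average on paper, which is exactly why it matters which one is realistic in a given context.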

A funny thing is that, even though it is a perfectly viable strategy to optimize work, the approach to improving throughput is often very, very naive and boils down to throwing more people at a project. Most of the time it is plain stupid, as we know from Brooks’s Law that:

Adding manpower to a late software project makes it later.

By the way, reading The Mythical Man-Month (the title essay) should be a prerequisite for getting any project management-related job. Seriously.

Anyway, these days, when we aim to optimize work, we often focus either on limiting WIP or reducing average cycle time. They both have a positive impact on the team’s results. Shorter cycle time in particular often looks appealing. After all, the faster we deliver the better, right?

Um, not always.

It all depends on how the work is done. One realization I had when I was cooking for the whole company was that I was consciously hurting my cycle time to deliver pizzas faster. Let me explain. The interesting part of the baking process looked like this:

Given that I had enough ready-to-bake pizzas, the first step was putting a pizza into the oven, then it baked, then I pulled it out of the oven and served it. Since it was almost a standardized process, we can assume standard times for each stage: half a minute for putting a pizza into the oven, 10 minutes of baking, and a minute to serve the pizza.

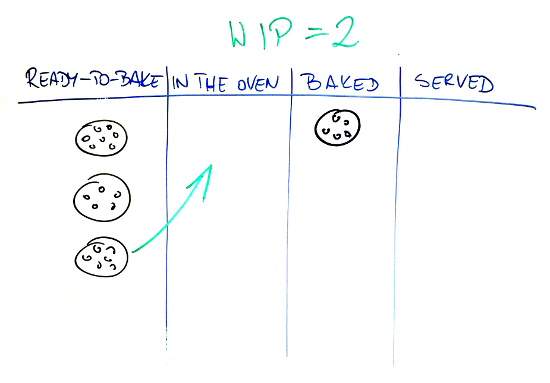

I was the only cook, but I wasn’t actively involved in the baking step, which is exactly what makes this case interesting. At the same time the oven was a bottleneck. What I ended up doing was protecting the bottleneck, meaning that I was trying to keep a pizza in the oven at all times.

My flow looked like this: putting a pizza into the oven, waiting till it’s ready, taking it out, putting another pizza into the oven and only then serving the one which was baked. Basically the decision-making point was when a pizza was baked.

One interesting thing is that the decision not to serve a pizza immediately after taking it out of the oven also meant increasing work in progress: I pulled in another pizza before the first one was done. One could say that I was another bottleneck, as my activities were split between protecting the original bottleneck (the oven) and improving cycle time (serving a pizza). Anyway, that’s another story to share.

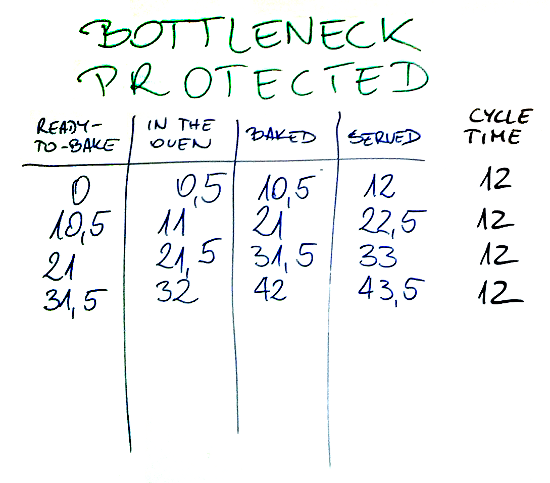

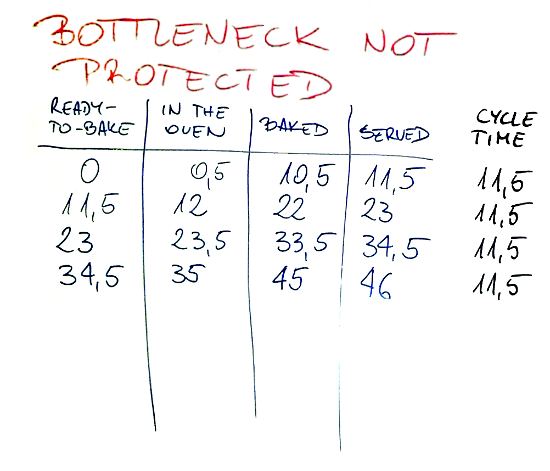

Now, let’s look at cycle times:

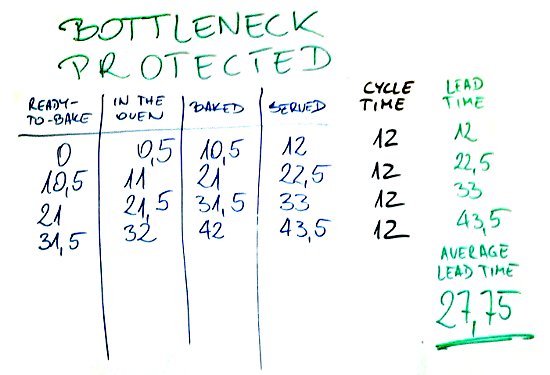

What we see in this picture is how many minutes had elapsed since the whole thing started. You can see that each pizza was served a minute and a half after it was pulled out of the oven, even though the serving part was only a minute long. That was because I was dealing with another pizza in the meantime. Average cycle time was 12 minutes.

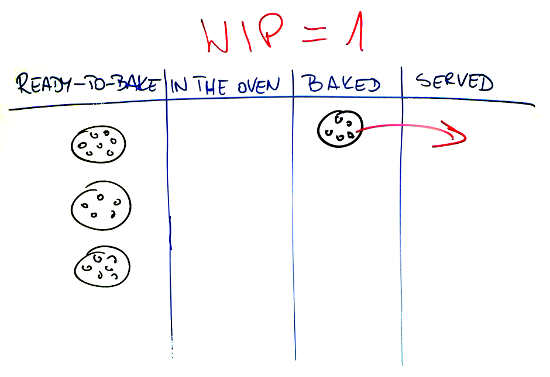

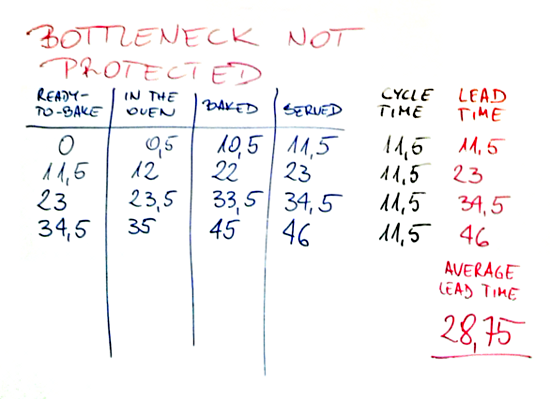

Now, what would happen if I tried to optimize cycle time and WIP? Obviously, I would serve pizza first and only then deal with another one.

Again, the decision-making point is the same, only this time the decision is different. One thing we see already is that I can keep a lower WIP, as I get rid of the first pizza before pulling another one in. Would it be better? In fact, cycle times improve.

This time, average cycle time is 11.5 minutes. Not a surprise since I got rid of a delay connected to dealing with the other pizza. So basically I improved WIP and average cycle time. Would it be better this way?
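The two cycle times can be checked with a few lines of Python. This is only a sketch: the stage times are the ones given above, while the constant and function names are made up for illustration.

```python
LOAD = 0.5    # minutes to put a pizza into the oven
BAKE = 10.0   # minutes of baking
SERVE = 1.0   # minutes to serve a baked pizza


def cycle_time(protect_oven: bool) -> float:
    """Minutes from starting to load a pizza until it is served."""
    # When the oven is protected, the baked pizza waits while the
    # next one is loaded; otherwise it is served right away.
    wait_for_next_load = LOAD if protect_oven else 0.0
    return LOAD + BAKE + wait_for_next_load + SERVE


print(cycle_time(protect_oven=True))   # 12.0
print(cycle_time(protect_oven=False))  # 11.5
```

The half-minute difference is exactly the time spent loading the next pizza while the baked one waits.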

No, not at all.

In this very situation I had a queue of people waiting to be fed. In other words, the metric which was more interesting for me was lead time, not cycle time. I wanted to optimize people’s waiting time, that is, the time from order to delivery (lead time), and not simply processing time (cycle time). Let’s have one more look at the numbers, this time with lead time added.

This is the scenario with protecting the bottleneck and worse cycle times.

And this is one with optimized cycle times and lower WIP.

In both cases lead time is counted as time elapsed from the very first second, so naturally lead times get worse with each consecutive pizza. Anyway, in the first case, after four pizzas we have a better average lead time (27.75 versus 28.75 minutes). This also means that I was able to deliver all these pizzas 2.5 minutes faster, so throughput of the system was also better. All that with worse cycle times and bigger WIP.
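These numbers can be reproduced with a small timeline simulation. It is a sketch under stated assumptions: one cook, one oven holding one pizza, and the stage times given earlier. In the protect-the-oven flow it also assumes a next pizza is loaded after the fourth one is baked, which is what the 27.75-minute average implies. All names are made up for illustration.

```python
LOAD, BAKE, SERVE = 0.5, 10.0, 1.0  # minutes per stage


def completion_times(pizzas: int, protect_oven: bool) -> list:
    """Minute at which each pizza is served, counted from the start."""
    done = []
    clock = LOAD  # the first pizza is now in the oven
    for _ in range(pizzas):
        clock += BAKE  # the pizza in the oven finishes baking
        if protect_oven:
            clock += LOAD               # load the next pizza first...
            done.append(clock + SERVE)  # ...then serve the baked one
            # Serving overlaps with baking, so the oven clock is not delayed.
        else:
            clock += SERVE              # serve the baked pizza first
            done.append(clock)
            clock += LOAD               # only then load the next one
    return done


protected = completion_times(4, protect_oven=True)
serve_first = completion_times(4, protect_oven=False)

print(protected, sum(protected) / len(protected))        # [12.0, 22.5, 33.0, 43.5] 27.75
print(serve_first, sum(serve_first) / len(serve_first))  # [11.5, 23.0, 34.5, 46.0] 28.75
print(serve_first[-1] - protected[-1])                   # 2.5
```

The last line is the 2.5-minute head start on the fourth pizza: the oven sits idle for a minute and a half per pizza in the serve-first flow, versus half a minute when it is protected.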

An interesting observation is that average lead time wasn’t better from the very beginning. It became so only after the third pizza was delivered.
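The running averages make the crossover visible. The sketch below derives each delivery minute from the stage times given earlier (0.5 minutes to load, 10 to bake, 1 to serve); the variable and function names are made up for illustration.

```python
from itertools import accumulate

# Delivery minute of the k-th pizza, counted from the start.
# Protecting the oven: a pizza leaves every 10.5 minutes, served 1.5 minutes later.
protected = [10.5 * k + 1.5 for k in range(1, 5)]
# Serving first: each pizza takes the full 11.5 minutes on its own.
serve_first = [11.5 * k for k in range(1, 5)]


def running_average(values):
    """Average of the first n values, for n = 1..len(values)."""
    return [total / n for n, total in enumerate(accumulate(values), start=1)]


for n, (a, b) in enumerate(
    zip(running_average(protected), running_average(serve_first)), start=1
):
    print(n, a, b)
# 1 12.0 11.5
# 2 17.25 17.25
# 3 22.5 23.0
# 4 27.75 28.75
```

After one pizza serving first is ahead, after two the averages are tied, and from the third pizza on protecting the oven wins by a growing margin.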

When you think about it, it is obvious. Protecting a bottleneck makes sense when you operate in a continuous manner.

Anyway, am I trying to convince you that the whole thing with optimizing cycle times and reducing WIP is complete bollocks and you shouldn’t give a damn? No, I couldn’t be further from this. My point simply is that understanding how the work is done is crucial before you start messing with the process.

As a rule of thumb, you can say that lower WIP and shorter cycle times are better, but only because so many companies have such ridiculous amounts of WIP and such insanely long cycle times that it’s safe advice in the vast majority of cases.

If you are, however, in the business of making your team work efficiently, you had better start with understanding how the work is being done, as a single bottleneck can change the whole picture.

One thought I had when writing this post was whether it translates to software projects at all. But then I recalled a number of teams that should think about exactly the same scenario. There are those which have the very same people dealing with analysis (prior to development) and testing (after development), or any other similar arrangement. There are those that have a Jack-of-all-trades on board and always ask what the best thing for him to work on is. There are also teams that use external people part-time to cover areas they don’t specialize in, both upstream and downstream. Finally, there are functional teams juggling many endeavors, trying to figure out which task is the most important to deal with at any given moment.

So as long as I keep my stance on Kanban principles I urge you not to take any advice as a universal truth. Understand why it works and where it works and why it is (or it is not) applicable in your case.

Because, after all, shorter cycle times and lower WIP limits are better. Except when they’re not.

3 comments

Very well explained! I thoroughly enjoyed reading this! Cheers!

Great post, you hooked me as soon as I saw pizza. I have a scenario just like this at the moment: 4 clients all wanting software, but there is an uncontrollable bottleneck (the clients’ response time). I could do what I usually do and limit WIP, but instead I’m progressing work for all 4 of them in the hope (as with your pizza example) that the overall lead time for all four asks will be much less than if I were to focus on one at a time. It’s not ideal, but the system has constraints and you have to do the best you can based on those constraints. Worst case, I could even lose a client if I limited WIP to one.

@Rob – That’s a tricky topic. I’m not saying: push your WIP through the roof in order to exploit the bottleneck. It would be stupid. It would be the equivalent of preparing 4 unbaked pizzas to wait only because the oven is a bottleneck. Not really the best strategy.

One part of protecting the bottleneck is making sure we never starve it of work to process. The other part, though, is making sure it isn’t overloaded with waiting work, so that the reaction time at the bottleneck station is rapid enough.

This is not to say that I have an answer as to how many streams of work with clients you should have ongoing. It’s simply saying that there likely is an optimum that is at neither of the extremes.