Have you ever cooked for twenty people? If you have, you know how different the process is from preparing dinner just for you and your spouse. A few days ago I was preparing lunch for folks at my company, and I’m still amazed how naturally we use the concepts of pull, WIP limits, bottlenecks and slack in situations like this.

I can’t help but wonder: why the hell can’t we use the same approach when dealing with our professional work?

OK, so here I am, cooking 15 pizzas for a small crowd.

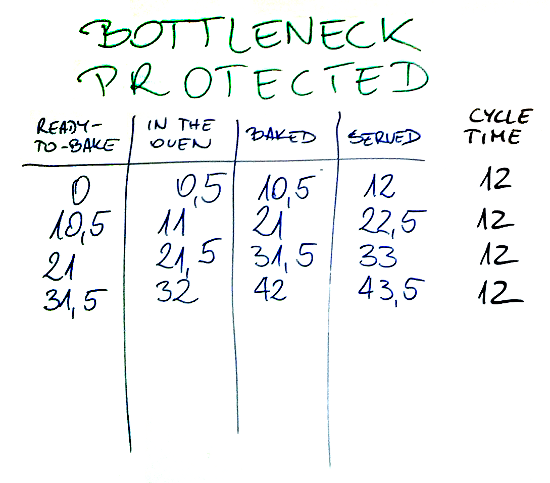

Bottlenecks

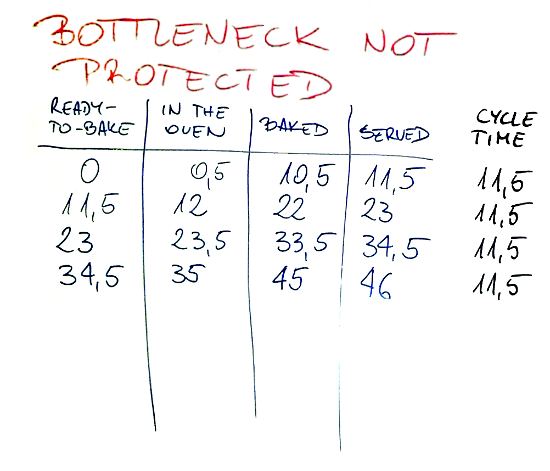

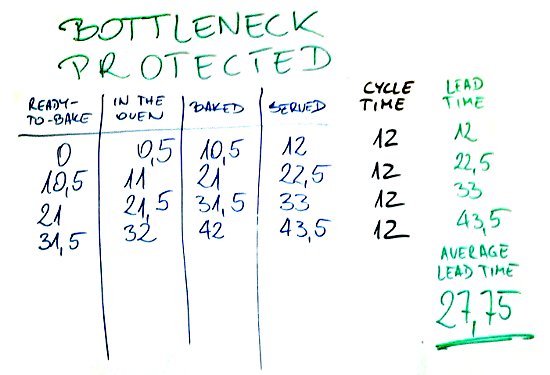

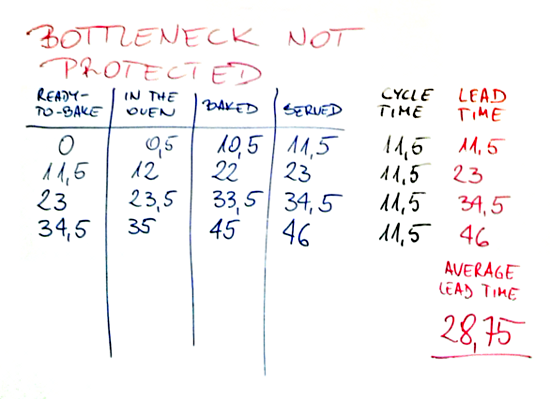

If you have read Eli Goldratt’s The Goal, you know that if you want to make the whole flow efficient you need to identify and protect bottlenecks. Having some experience with preparing pizzas for a few people, I easily guessed that the bottleneck would be the oven.

The more interesting part is how, knowing what the bottleneck is, we automatically start protecting it. The very next thing I did after taking a pizza out of the oven was to put another one in. If I had decided to serve the pizza first, I would have left my bottleneck resource (the oven) idle, which would have affected the whole process and its length.

Interestingly enough, protecting the bottleneck in this case resulted in a longer cycle time and, for the first delivery, a worse lead time too. That’s the subject for another story though.

The lesson here is about dealing with bottlenecked parts of our processes. One of the recent conversations I’ve had was about bringing more developers into a project where business analysis was a bottleneck. It would be like hiring waiters to help me serve pizzas and expecting that to make the whole process faster.

It’s even worse if you don’t know what your bottleneck is. In the business analysis story I mentioned, the team learned where the problem was only some time into the project. Before that, they would actually have been willing to hire more waiters, expecting that to improve the situation.
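The waiter analogy can be put into numbers with a minimal sketch. The stage names and the rates (pizzas per hour) below are made up for illustration and do not come from the actual lunch; the only point is that the slowest stage caps the whole flow, so adding capacity anywhere else changes nothing.

```python
def system_throughput(stage_rates):
    """Return (bottleneck_stage, rate): the slowest stage caps the whole flow."""
    bottleneck = min(stage_rates, key=stage_rates.get)
    return bottleneck, stage_rates[bottleneck]


# Hypothetical rates in pizzas per hour (assumed numbers, not measured ones).
kitchen = {"prepare": 12, "bake": 6, "serve": 30}
print(system_throughput(kitchen))       # ('bake', 6)

# "Hiring waiters": doubling serving capacity leaves the result unchanged.
more_waiters = {**kitchen, "serve": 60}
print(system_throughput(more_waiters))  # ('bake', 6)
```

Swap in developers for waiters and business analysis for the oven and the arithmetic stays the same.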

WIP Limits

Fifteen pizzas and one cook. If I acted like an average software development team, I would prepare dough for all the pizzas, then move on to the tomato sauce, then to the other ingredients. Three hours later I would realize that, because of a system constraint, I can’t bake in batch. I would switch my efforts to dealing with a hungry and angry crowd, focusing more on their dissatisfaction than on delivering value. Fortunately, eventually I would run a retrospective where I would learn that I made a mistake with the baking part, so I would file a retro summary in a place no one ever looks again and congratulate myself on a heroic effort of dealing with hungry clients.

Instead, I limited the amount of work invested in preparing dough and ingredients. I prepared enough to keep the oven running all the time.

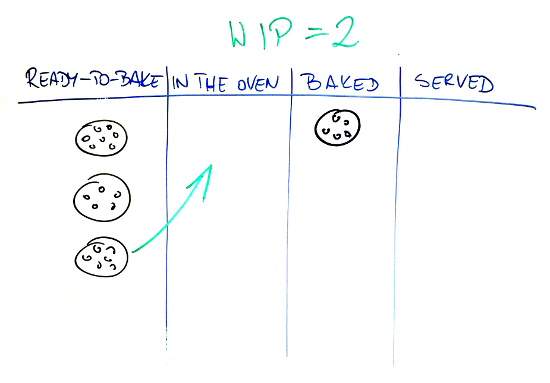

Well, actually I prepared more. I started with a WIP limit of 6 pizzas, meaning that I had 6 ready-to-bake pizzas when the oven was ready. Very soon I realized one obvious and two less obvious issues with such a big WIP limit.

First, 6 pizzas take up a lot of space. Space which was limited. Even more so when more people popped up in the kitchen waiting for their share. This is basically a cost of inventory. Unfortunately, in the software industry we deal with code, so we don’t really see how it stacks up and takes up space until it’s too late and fixing a bug becomes a dreadful task no one is willing to undertake.

If only we had to find a place to store tiny physical zeros and ones for each bit of our code… The industry would rock and roll.

The other two issues weren’t that painful. If you keep an unbaked pizza waiting too long, it isn’t as good, because it ends up a bit too dry after baking. I also realized that I could easily prepare new pizzas at a pace that didn’t require such a big queue in front of the oven. I could prepare a better (fresher) product and it still wouldn’t affect the flow.

So I quickly reduced my queue of pizzas in front of the oven to 4, 3 and eventually 2. Sure, it changed how I worked, but it also made me more flexible. I didn’t need so much space and could react to special requests much more easily.
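A rough back-of-the-envelope sketch shows why the shorter queue meant fresher pizzas. It assumes a single oven that bakes one pizza at a time and is never idle, and the 10-minute bake time is an assumed number, not one measured in my kitchen.

```python
def max_wait_before_baking(wip_limit, bake_minutes):
    """Upper bound on the minutes a ready-to-bake pizza waits for the oven.

    Assumes one oven, one pizza at a time, never idle: a pizza that joins
    a full queue waits at most one bake cycle per slot in the WIP limit.
    """
    return wip_limit * bake_minutes


BAKE_MINUTES = 10  # hypothetical bake time
for limit in (6, 4, 3, 2):
    print(limit, max_wait_before_baking(limit, BAKE_MINUTES))
# 6 60
# 4 40
# 3 30
# 2 20
```

The oven is equally busy in every row; the only thing the bigger limit buys is a longer wait for each pizza.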

Surprisingly enough, WIP limits in a kitchen seem so intuitive. It’s often more convenient to work in small batches. Such an approach helps to focus on the bottleneck. If you’re dealing with physical inventory, you also literally see the cost of excessive inventory. Unfortunately, with code it’s not that visible even though it’s a liability too.

That doesn’t mean the mechanism works any differently for code. A lot of unfinished work increases multitasking, incurs the cost of context switching and lengthens feedback loops. It just isn’t that visible, which is why we don’t naturally limit work in progress.

Slack Time

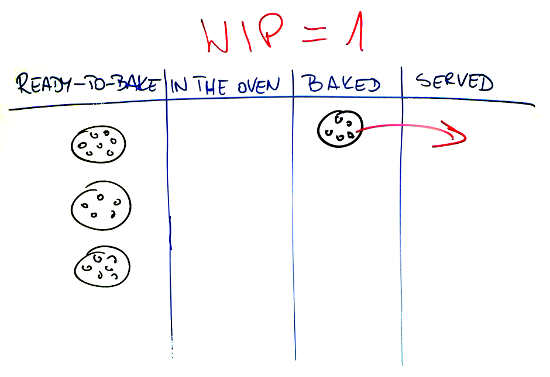

When we are talking about WIP limits we can’t forget about slack time. Technically I could prepare an infinite queue of ready-to-bake pizzas in front of the oven. Of course no sane cook would do this.

Anyway, when I started limiting my pizzas in progress I was facing moments when, in theory, I could have been preparing another one but didn’t. I didn’t, even when there was nothing else to be done at the moment.

A canonical example of slack time.

I used my slack time to chat with people, so I was happier (and we know that happy people do a better job). I got myself a coffee, so I improved my energy level. I also used slack to rearrange the process a bit so my work would become more efficient. Finally, slack time was an occasion to check the remaining ingredients to learn what pizzas I could still deliver.

In short, I was doing anything but pushing more work into the system. It wouldn’t have helped anyway, as I was bottlenecked by the oven and knew my pace well enough to come up with reasonable, yet safe, WIP limits which told me when I should start preparing the next pizza.

There are two lessons for us here. First, learn how the work is being done in your case. This knowledge is a prerequisite for setting reasonable WIP limits. And yes, the best way to learn what WIP limits are reasonable in a specific case is to experiment and see what works and what lets you keep the pace of the flow.

Second, slack time doesn’t mean idle time. Most of the time it is used to do meaningful stuff, very often system improvements that result in better efficiency. When all that people hear in my argument for slack time is “sometimes it’s better to sip coffee than to write code” I don’t know whether I should laugh or cry. It seems they don’t even try to understand, let alone measure, the work their teams do.

Pull

And, finally, the pull principle. As we already know that the critical part of the whole process was the oven, let’s start there. Whenever I took a pizza out of the oven, it was a signal to pull another pizza into the oven. Doing this freed one space in my queue of pizzas in front of the oven. That was a pull signal to prepare another one. To do this I pulled dough, tomato sauce and the rest of the ingredients. When I ran out of any of these I pulled more of them from the fridge.

Pull all over the place. Getting everything on demand. I was chopping vegetables or opening the next pack of salami only when I needed them. There were almost no leftovers.

Assuming that I could replenish almost every ingredient during the time a pizza was being baked, I was safe. I could even rely on the assumption that running out of all the ingredients at the same time was close to impossible. And even then I had a buffer of ready-to-bake pizzas.

The only exception was dough, as preparing dough took more time. Dough was my epic story. This part of the work was common for a bunch of pizzas all derived from the same batch of dough, just like stories derived from an epic. In this case I was just monitoring the inventory of dough so I could start preparing the next batch soon enough. Again, there was a pull signal but it was a bit different: there are only two pieces of dough left; I should start preparing another batch so it will be ready once I run out of the current one.
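The "two pieces of dough left" signal is essentially a reorder point. Here is a minimal sketch of how such a threshold could be derived; it assumes the oven consumes one piece of dough per bake cycle, and the 20-minute dough preparation time and 10-minute bake time are invented for the example.

```python
import math


def dough_reorder_point(dough_prep_minutes, bake_minutes):
    """Pieces of dough left at which the next batch should be started.

    Assumes one piece is used per bake cycle, so the remaining pieces
    must cover the time needed to prepare a new batch.
    """
    return math.ceil(dough_prep_minutes / bake_minutes)


# Hypothetical timings: 20 minutes to make dough, 10 minutes per pizza in the oven.
print(dough_reorder_point(20, 10))  # 2
```

If dough took longer to prepare, or pizzas baked faster, the signal would have to fire earlier, with more pieces still on the counter.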

The lesson about pull is that we should think about the work we do “from right to left.” We should start with work items that are closest to being done and consider how we can get them closer to completion. Then, going from there, we’ll be able to pull work throughout the whole process as with each pull we’ll be creating free space upstream.

Once we deploy something we create free space so we can pull something to acceptance testing. As a result we free some space in testing and pull features that are developed, which makes it possible to pull more work from a backlog, etc.
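Reading the board from right to left can be sketched in a few lines. This is a toy model, not a real Kanban tool: the stage names, the WIP limits of 1 and the `pull_once` helper are all assumptions made for illustration, and it simplifies things by treating the first item in every stage as finished and ready to be pulled.

```python
def pull_once(board, limits):
    """Move at most one item into each stage, scanning from right to left.

    `board` maps stage name -> list of items, ordered from upstream to
    downstream. `limits` maps stage name -> WIP limit; stages without a
    limit (the backlog, "deployed") are treated as unbounded.
    """
    stages = list(board)
    for i in range(len(stages) - 1, 0, -1):
        target, source = stages[i], stages[i - 1]
        has_room = len(board[target]) < limits.get(target, float("inf"))
        if has_room and board[source]:
            # Pulling frees a slot in `source`, which the next iteration
            # (one stage further upstream) can then fill.
            board[target].append(board[source].pop(0))
    return board


board = {
    "backlog": ["E", "F"],
    "development": ["D"],
    "testing": ["C"],
    "acceptance": ["B"],
    "deployed": [],
}
limits = {"development": 1, "testing": 1, "acceptance": 1}

print(pull_once(board, limits))
# {'backlog': ['F'], 'development': ['E'], 'testing': ['D'],
#  'acceptance': ['C'], 'deployed': ['B']}
```

Scanning in the opposite direction, from the backlog toward deployment, would find every limited stage full and move nothing except the final deploy, which is why the order of the scan matters.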

When using this approach along with WIP limits, we don’t introduce an excessive amount of work into the system and we keep our flow efficient.

Once we learn that earlier stages of work may require more time than later ones we may adjust pull signals and WIP limits accordingly so we keep the pace of the flow.

Summary

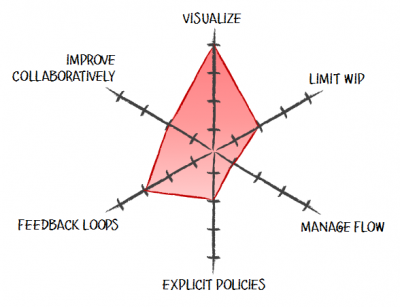

I hope the story makes it easier to understand the basic concepts introduced by Kanban. Actually, I’d say that if software were physical, people would understand the concepts of flow, WIP limits, pull and protecting bottlenecks far more easily. They would see how their undelivered code clutters their workspace, impacts their pace and affects their flow of work.

So how about this: ask yourself the following questions.

Where is the oven in your team?

Who is working on this part of the process?

Do you protect them?

How many ready-to-bake pizzas do you have typically?

How many of these do you really need?

What do you do when you can’t put another pizza into the oven?

What kind of space do your pizzas occupy?

Do your pizzas taste the same, no matter how long they are queued?

Do you need all the ingredients prepared up front?

How many ingredients do you typically have prepared?

How do you know whether you need dough and when you should start preparing it?

Look at your work from this perspective and tell me whether it helps you to understand your work better. It definitely does in my case, so do expect further pizza stories in the future.